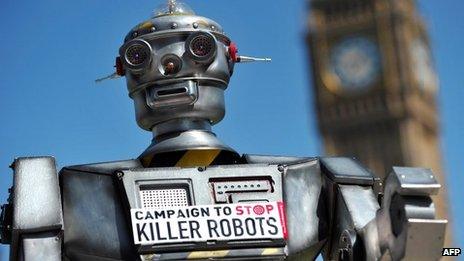

UN mulls ethics of 'killer robots'

So-called killer robots are due to be discussed at the UN Human Rights Council, meeting in Geneva.

A report presented to the meeting will call for a moratorium on their use while the ethical questions they raise are debated.

The robots are machines programmed in advance to take out people or targets, which - unlike drones - operate autonomously on the battlefield.

They are being developed by the US, UK and Israel, but have not yet been used.

Supporters say the "lethal autonomous robots", as they are technically known, could save lives, by reducing the number of soldiers on the battlefield.

But human rights groups argue they raise serious moral questions about how we wage war, reports the BBC's Imogen Foulkes in Geneva.

They include: Who takes the final decision to kill? Can a robot really distinguish between a military target and civilians?

If there are serious civilian casualties, they ask, who is to be held responsible? After all, a robot cannot be prosecuted for a war crime.

"The traditional approach is that there is a warrior, and there is a weapon," says Christof Heyns, the UN expert examining their use, "but what we now see is that the weapon becomes the warrior, the weapon takes the decision itself."

The moratorium called for by the UN report is not the complete ban human rights groups want, but it will give time to answer some of those questions, our correspondent says.
